Soon you will be able to search by image to find matching places, stores or restaurants near you.

What is Google multisearch. Google multisearch lets you use your phone's camera to search by image, powered by Google Lens, and then add a text query on top of that image search. Google then uses both the image and the text query to show you visual search results.

What is near me multisearch. The near me option narrows those image-plus-text queries to local results: you can look for products, or anything else, through your camera and find where it is available nearby. So if you want to find a restaurant that serves a specific dish, you can do so.

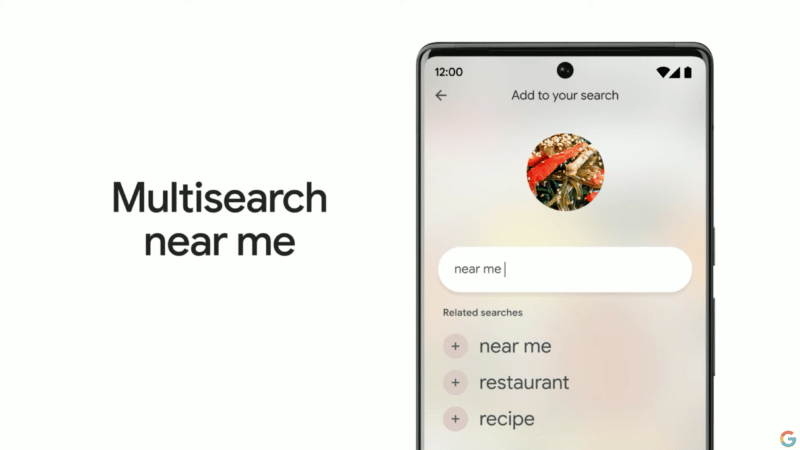

What multisearch near me looks like. Here is a screenshot followed by a GIF from the Google I/O keynote:

MUM not yet in multisearch. Google said in its blog post that “this is made possible by our latest advancements in artificial intelligence, which is making it easier to understand the world around you in more natural and intuitive ways. We’re also exploring ways in which this feature might be enhanced by MUM– our latest AI model in Search– to improve results for all the questions you could imagine asking.”

I asked Google whether multisearch currently uses MUM, and the company said no. For more on where Google uses MUM, see our story on how Google uses artificial intelligence in search.

Available in US/English. Multisearch is live now and should be available as a “beta feature in English in the U.S.,” Google said. The near me version, however, will not go live until later this year.

Why we care. As Google releases new ways for consumers to search, your customers may reach the content on your website in new ways as well. How consumers reach your content (desktop search, mobile search, voice search, image search and now multisearch) may matter to you because it can signal how likely a customer is to convert, where the searcher is in their buying cycle, and more. With the near me option, this becomes even more important for local businesses.